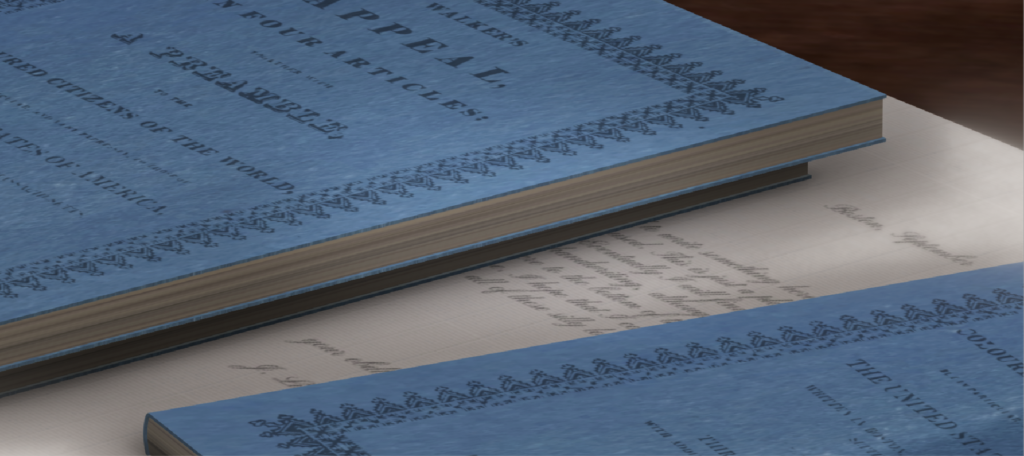

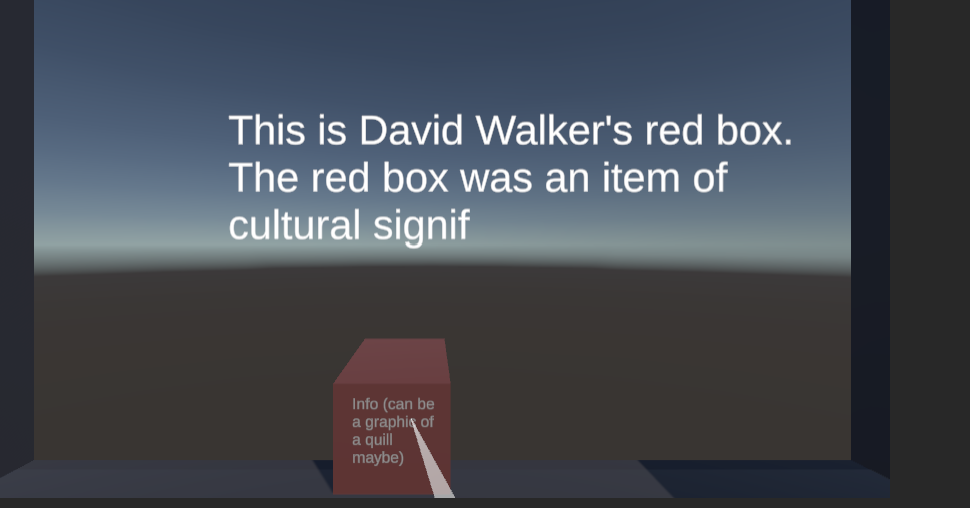

Over the past month, I’ve been developing the project’s UI/UX (navigation and captions) within Unity’s XR system. My goal is to create controls that let the user move around the house, move between floors and gather information about different objects. To enable locomotion, the navigable portions of each floor are made into teleportation areas, which allow free movement throughout the scene. Free movement is more immersive than a system of teleportation anchors, which restricts the user to fixed points. For computational efficiency, we want each floor of the house to be contained in a separate Unity scene, with users moving between floors by interacting with an object such as the staircase.

For object interactions, I want users to be able to hover over an object to see whether it is interactive. Hovering would display an icon in front of the object and possibly play a sound effect. Once an object is selected, curatorial captions will appear as if someone were “typing” them across the screen. If the object is selected again after the caption has been triggered, the text disappears. Every later time the same caption is activated, the user will not have to wait for the text to type out and will instead see the full description immediately. In a test scene, I have gotten the teleportation area and the hover icon working.
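The caption behavior described above amounts to a small state machine: the first selection types the caption, the next selection hides it, and any later selection shows it in full. The following is a minimal sketch of that logic in Python for illustration only; in the project it would live in a Unity C# script. The `CaptionController` class, its method name and the returned action strings are hypothetical, and the sketch assumes that selecting an object while its caption is still typing also hides it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CaptionController:
    """Hypothetical model of the caption toggle logic (not a Unity API)."""

    # Objects whose caption is currently on screen.
    visible: set[str] = field(default_factory=set)
    # Objects whose caption has already been shown at least once.
    seen: set[str] = field(default_factory=set)

    def on_select(self, object_id: str) -> str:
        """Handle one select event and return the action the UI should take."""
        if object_id in self.visible:
            # Selected again while the caption is up (assumed to include
            # mid-typing): hide the text.
            self.visible.remove(object_id)
            return "hide"
        self.visible.add(object_id)
        if object_id in self.seen:
            # Caption was shown before: skip the typing animation.
            return "show_full"
        # First time: remember it and play the typing animation.
        self.seen.add(object_id)
        return "type"


captions = CaptionController()
print(captions.on_select("clock"))  # type
print(captions.on_select("clock"))  # hide
print(captions.on_select("clock"))  # show_full
```

Keeping the `seen` set separate from the `visible` set is what lets a caption be hidden without forgetting that the user has already read it.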

I’ve also made the caption text appear letter by letter on the screen when an object is selected, but at the moment the user has to hold the button down for the whole time the text is being displayed.
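One way to stop the typing effect from depending on a held button is to record the moment of the select event and derive the number of visible characters from the elapsed time on every frame. The sketch below shows that idea in Python. The function name and the `chars_per_second` parameter are hypothetical, and the timestamps stand in for whatever clock a Unity script would read each frame.

```python
def visible_text(text: str, started_at: float, now: float,
                 chars_per_second: float = 30.0) -> str:
    """Return the prefix of `text` that should be on screen at time `now`.

    The reveal depends only on the time elapsed since the select event
    (`started_at`), not on whether the controller button is still held.
    """
    if now <= started_at:
        return ""
    count = int((now - started_at) * chars_per_second)
    # Slicing past the end simply returns the whole string.
    return text[:count]


# Half a second after selection at 10 characters per second:
print(visible_text("Example caption", 0.0, 0.5, 10.0))  # Examp
```

Because the function only needs the start time, the same call can show the full caption immediately, for example by passing a `started_at` far in the past.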

In the next few weeks I will improve the design of these UX elements with better graphics and typefaces. I also plan to add a teleportation reticle and the sound effects mentioned above. Finally, I hope to find a way to make the information text appear on one selection and disappear on the next, which is a more intuitive interaction than holding the button.

Through this process, I’ve had to write a few scripts, which has been a challenge for someone with minimal coding experience. As I refine the UX further, I want to learn to write more complex scripts to achieve the best end result.